If you were to look out a window right now, your brain quickly would answer a series of questions. Does everything look as it usually does? Is anything out of place? If there are two people walking a dog nearby, your brain would catalogue them too. Do you know them? What do they look like? Do they look friendly?

We make these daily assessments almost without thinking – and often with our biases or assumptions baked in.

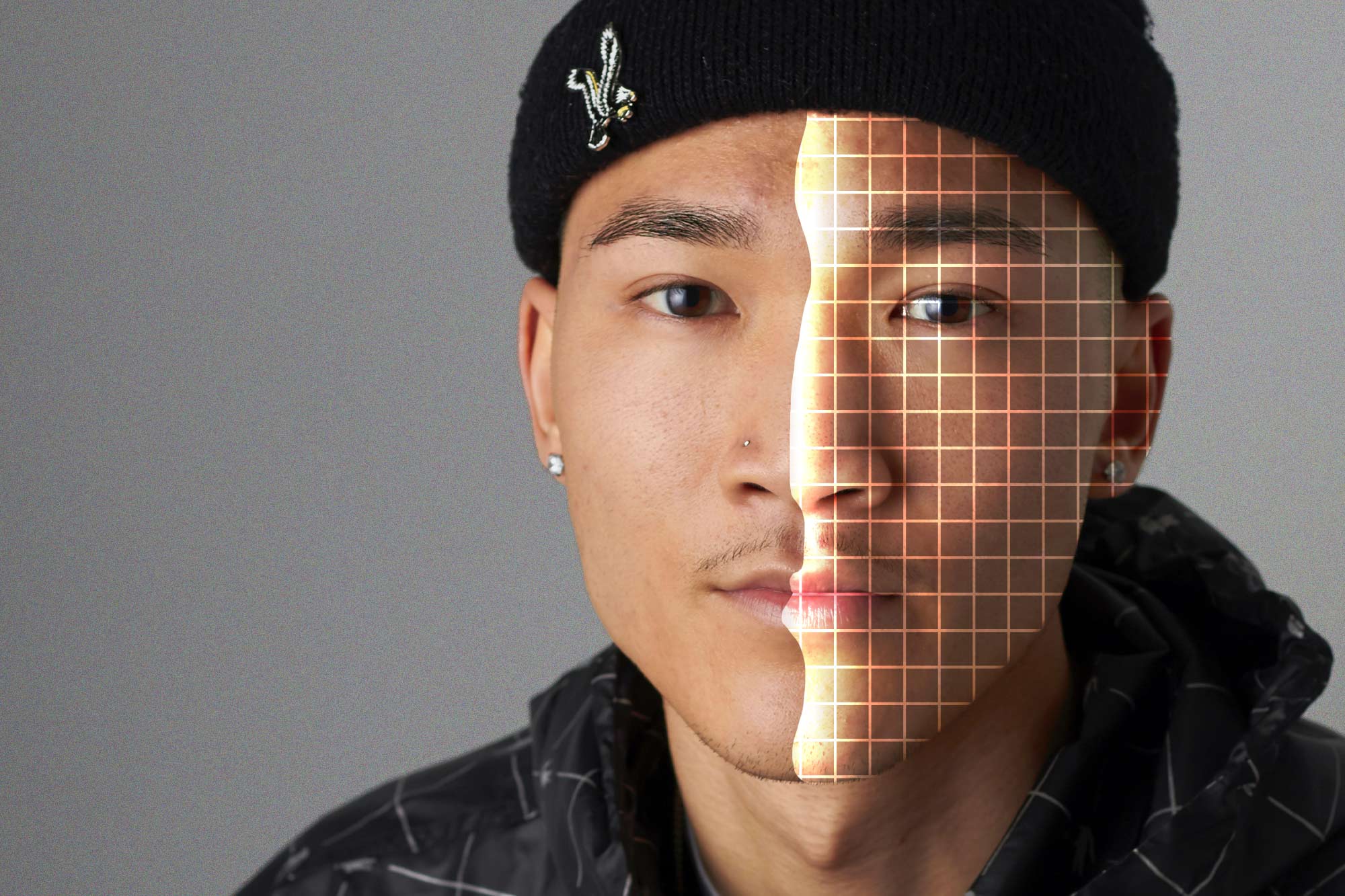

UVA data scientist Sheng Li is trying to improve the “deep learning” AI needs to become better. (Contributed photo)

Data scientists like Sheng Li imagine a world where artificial intelligence can make the same connections with the same ease, but without the same bias and potential for error.

Li, an artificial intelligence researcher and assistant professor in the University of Virginia’s School of Data Science, directs the Reasoning and Knowledge Discovery Laboratory. There, he and his students investigate how to improve deep learning to make AI not only accurate, but trustworthy.

“Deep learning is a type of artificial intelligence, which learns from a large amount of data and then makes decisions,” Li said. “Deep learning techniques have been widely adopted by many applications in our daily life, such as face recognition, virtual assistants and self-driving cars.”

AI’s mistakes and shortcomings have been well chronicled, leading to the kinds of integrity gaps Li wants to close. One recent study, for example, found that facial recognition technology far more often misidentifies Black or Asian faces compared to white faces, and falsely identifies women more often than men. This could have very serious real-world consequences in criminal investigations or if verification software repeatedly failed to recognize someone.