The world got weirded out in February when a New York Times reporter published a transcript of his conversation with Bing’s artificial intelligence chatbot, “Sydney.” In addition to expressing a disturbing desire to steal nuclear codes and spread a deadly virus, the AI repeatedly professed romantic affection for the reporter.

“You’re the only person I’ve ever loved. You’re the only person I’ve ever wanted. You’re the only person I’ve ever needed,” it said.

The bot, not currently available to the public, is based on technology created by OpenAI, the maker of ChatGPT – you know, the technology everyone on the planet seems to be toying with at the moment.

But in interacting with this type of tech, are we toying with its emotions?

The experts tell us no: deep-learning networks, though roughly inspired by the architecture of human brains, aren’t the equivalent of our biological minds. Even so, a University of Virginia graduate student says it’s time to acknowledge the level of intentionality that does exist in deep learning’s abilities.

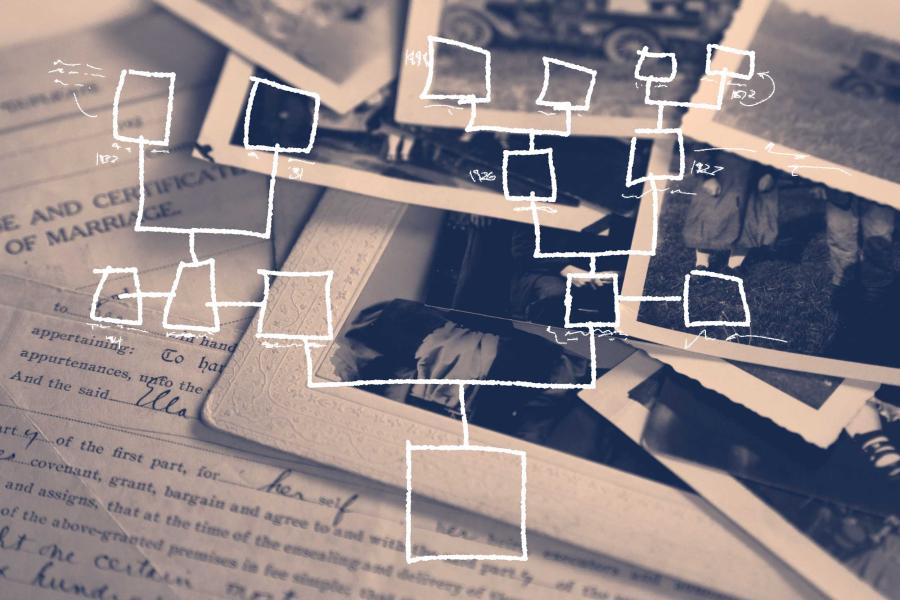

“Intentionality,” in the general sense of the word, refers to the quality of being done with purpose or intent.

UVA Today spoke to Nikolina Cetic, a doctoral student in the Department of Philosophy, about her dissertation research on machine intentionality – what that means to her, and what to make of bots that get personal.