The recent crash of a self-driving car that resulted in the death of a pedestrian in Arizona shows that self-driving technology is still a work in progress, and will require more rigorous testing, according to a University of Virginia researcher who studies the safety of autonomous vehicles.

However, UVA computer scientist Madhur Behl predicted these vehicles will be safely deployed in the near future, and eventually will become ubiquitous on our streets.

“My 6-month-old niece probably will never need a driver’s license,” he said. “Her car will do the driving for her.”

When self-driving cars become a common and safe reality, it will be the result of work by engineers and computer scientists like Behl, who are building the future now.

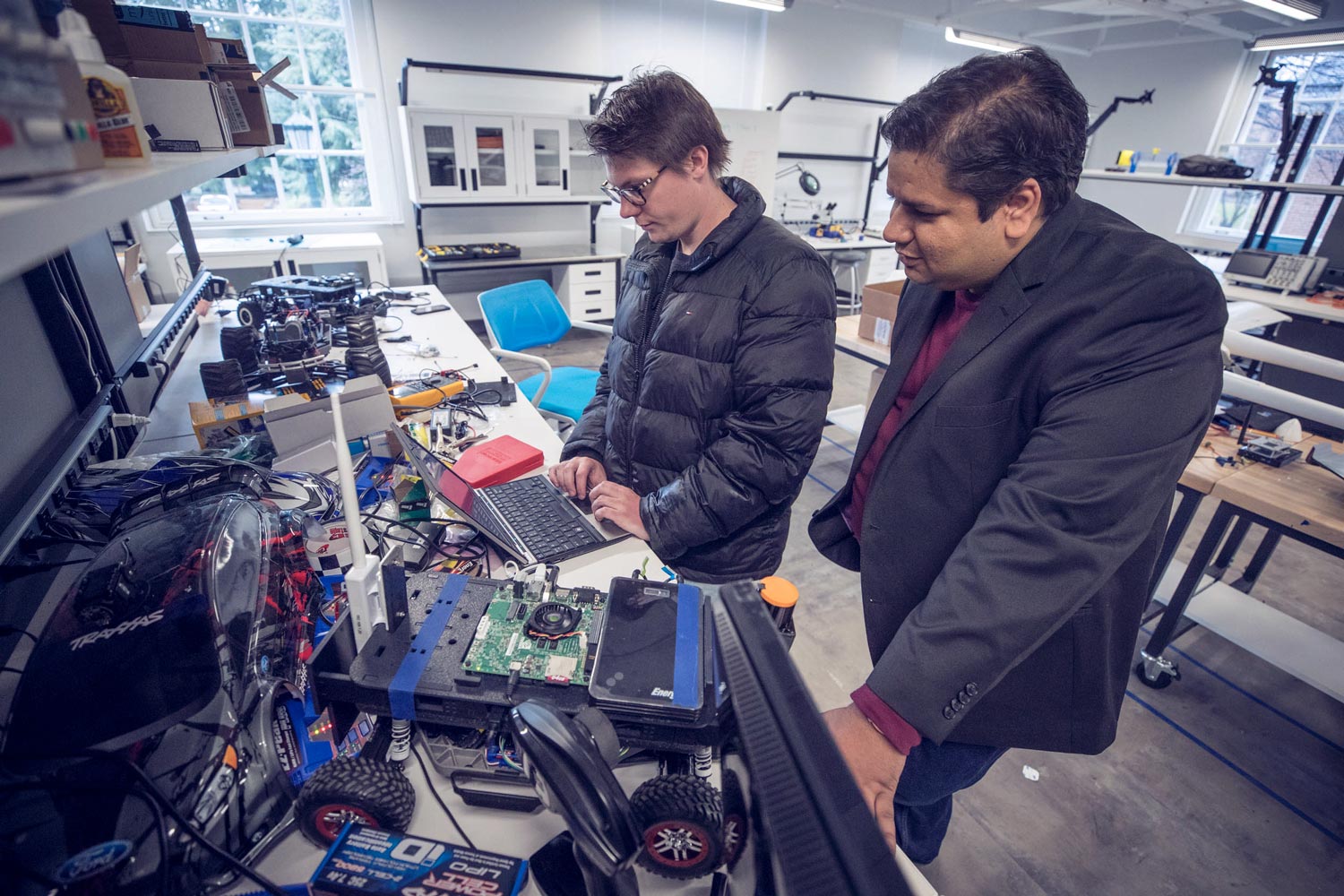

Behl, who came to UVA’s School of Engineering and Applied Science last year from the University of Pennsylvania, is using “machine learning” – essentially, teaching computers to “think” based on training from past experiences – to develop integrated sensing and computing systems that will do the driving for you, and better than you ever could. Working with his students, he is using 1/10-scale autonomous Formula 1 race car models in a miniature race track at his lab to test, train and eventually optimize control systems that can translate to full-sized cars for the road. It’s a relatively inexpensive and highly effective method.

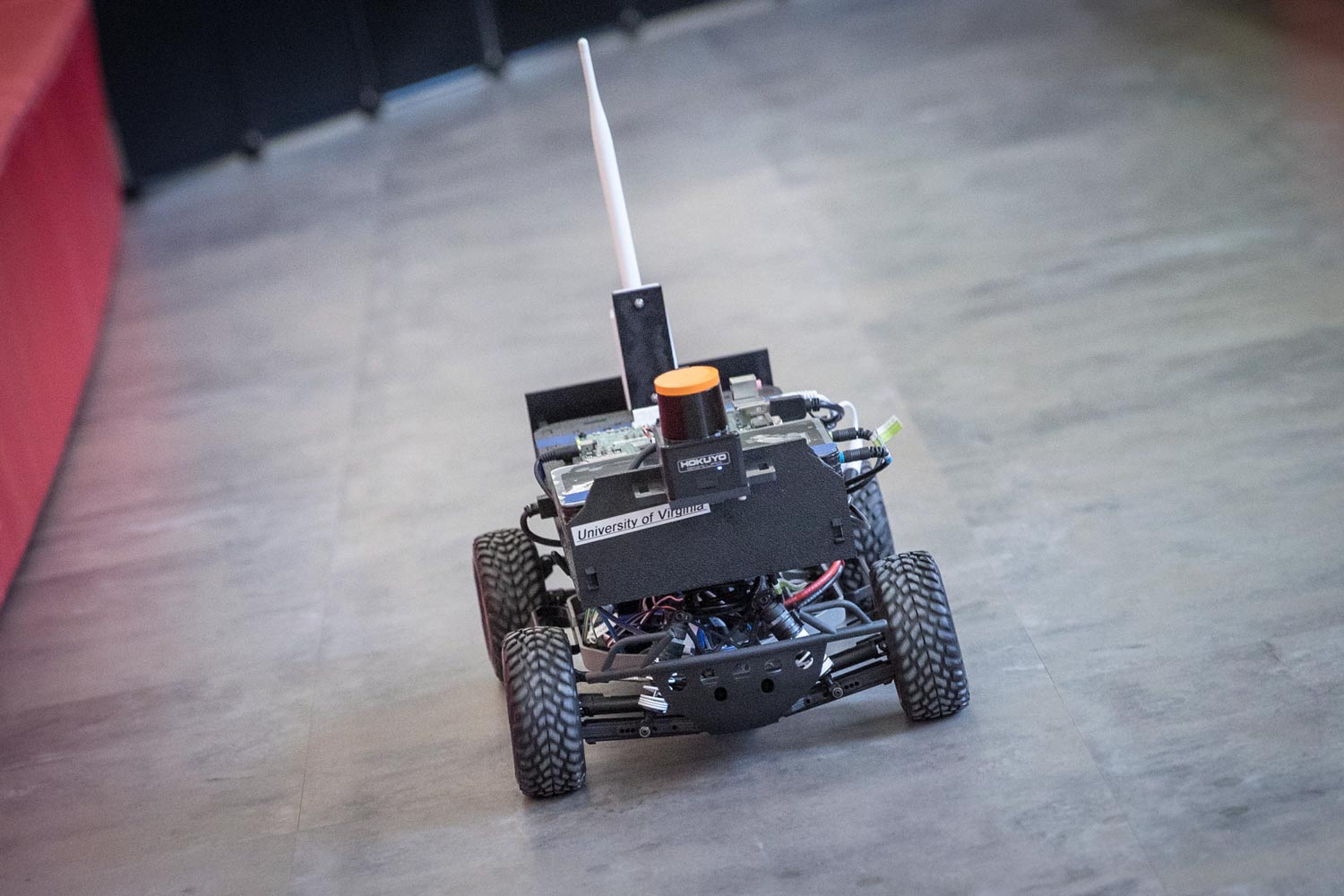

Behl is using 1/10-scale models to optimize control systems for translation to full-sized autonomous cars.

Behl describes his model cars as “1/10th the scale, but 10 times the fun!”

“The future of vehicles is autonomous,” he said. “Within about a decade, there will be no need for you to hold onto the steering wheel or to be ready to brake or accelerate at all times. Vehicles will take over the majority of the driving load, and drivers will become passengers.”

He said that while many people today feel they cannot trust a car to drive itself safely, autonomous cars will be safer than human drivers.

“These vehicles will increase the safety of driving because the system will never get drunk or tired. It won’t get distracted by a phone call or texts, and it won’t drive irresponsibly,” he said. “As a result, car crashes due to human error will drop to nearly zero.”

That sounds great, but a lot of machine learning and engineering stand between now and then. That’s where the fun is for Behl and his colleagues – developing the technology and working through the sometimes tedious but integral details involved in making cars drive safely on their own.

An autonomous car model is tested on a track in the Engineering School’s Link Lab.

The vehicles are equipped with a range of sensors and computer systems that let them drive fast and smart, avoiding each other as well as walls and obstacles, while getting from Point A to Point B efficiently, safely and with minimal fuel (in this case, electricity, which Behl says is the vehicle fuel of the future). The sensors include multiple cameras; laser range sensors, or LIDARs; radar; GPS; and others that work in coordination with high-performance computers and precision steering and braking systems.

Already, autonomous vehicles are being tested on highways and proving to work well, though not flawlessly. Behl said daytime highway driving is the easy part because most of that form of driving is relatively routine, very structured and mostly obstacle-free. But it gets much more complicated when a vehicle drives at night, or enters a city, and confronts an array of potentially confounding situations – pedestrian crossings, bicyclists sharing the road and intersections with four-way stop signs.

That’s where machine learning comes in – manually training the autonomous system to recognize and operate safely in a range of scenarios, including unusual situations, such as a car ahead suddenly veering across three lanes, or an anomaly like a boulder suddenly rolling off a cliff onto the road.

“We have to try to anticipate every possible situation a vehicle could encounter, and then train the system to safely react to it,” Behl said. “However, we can never account for all possible traffic situations, no matter how many miles of training data we gather. Therefore, we are teaching our cars to react safely under unexpected traffic situations and edge cases, by developing more sophisticated machine learning algorithms which can maneuver the car at the limits of its agility, when needed.”

Behl is leading students in the design of control systems for safe, self-driving cars of the near future.

Researchers already have solved many of the fundamentals for self-driving cars – training the vehicles to stay in lanes, change lanes as needed, move around obstacles without running into other vehicles, maintain safe distances, and accelerate and slow to keep pace with the flow of traffic. Some of these capabilities are already available on newer cars, such as adaptive or “intelligent” cruise control, lane-keeping assist and emergency-braking assist. The rest will be introduced in stages as the systems are developed and prove trustworthy.

That’s a whole other aspect of driverless cars – gaining the trust of passengers and of others in the vicinity of an autonomous vehicle on the road. Behl is working with another UVA researcher, computer scientist Lu Feng, to develop techniques for better understanding the role of trust in human/autonomous vehicle interactions. They conduct their work in the Engineering School’s Link Lab, a new $4.8 million, 17,000-square-foot facility that brings together researchers from five departments to collaborate on a range of big-issue, multidisciplinary engineering problems and challenges.

“Behind the wheel of a self-driving car, everybody becomes a backseat driver,” Behl said. “The autonomous car will need to inform passengers and others in various ways that the vehicle is under control, that it has situational awareness, that it can be trusted to respond properly to driving conditions, that the vehicle is predictable in behavior, not aberrant.”

During tests, automotive companies deploy safety drivers in autonomous cars to potentially take action, as needed, to help prevent crashes. But the Arizona incident demonstrates that a safety driver cannot always intervene in time. Behl said the driverless systems need to be improved at the interface of human-vehicle interactions to increase overall safety for people both inside and outside of the car.

“Initially, it will be uncomfortable for some people who have been driving all their lives to turn over control to the car, to bet their lives on it,” Behl said. “But as these systems are introduced, incrementally, and ultimately prove safe, most people will gain trust and probably come to prefer to let their vehicle handle the driving load. But we have to build that confidence by building trustworthy systems. And so, in our research, we are trying to build mathematical models to understand how trust and driving behavior varies from one person to the other.”

Eventually, he says, a new generation of passengers will emerge who never need to learn to drive. The cars will have already learned to drive, or more correctly, been taught to drive, by researchers like Behl and his colleagues.

March 28, 2018
